Please refer to RP-213599 for detailed scope of the SI.

R1-2205695 Session notes for 9.2 (Study on Artificial Intelligence (AI)/Machine Learning (ML) for NR air interface) Ad-hoc Chair (CMCC) (rev of R1-2205572)

R1-2205021 Work plan for Rel-18 SI on AI and ML for NR air interface Qualcomm Incorporated

R1-2205022 TR skeleton for Rel-18 SI on AI and ML for NR air interface Qualcomm Incorporated

[109-e-R18-AI/ML-01] Juan (Qualcomm)

Email discussion and approval of TR skeleton for Rel-18 SI on AI/ML for NR air interface by May 13

R1-2205478 [109-e-R18-AI/ML-01] Email discussion and approval of TR skeleton for Rel-18 SI on AI/ML for NR air interface Moderator (Qualcomm Incorporated)

R1-2205476 TR 38.843 skeleton for Rel-18 SI on AI and ML for NR air interface Qualcomm Incorporated

Decision: As per email decision posted on May 22nd, the revised skeleton in R1-2205478 is still not stable. Discussion to continue in next meeting.

Including characterization of defining stages of AI/ML algorithm and associated complexity, UE-gNB collaboration, life cycle management, dataset(s), and notation/terminology. Also including any common aspects of evaluation methodology.

R1-2203280 General aspects of AI PHY framework Ericsson

· Proposal 5: Study the following three collaboration cases:

o Single-sided ML functionality at the gNB/NW only,

o Single-sided ML functionality at the UE only,

o Dual-sided joint ML functionality at both the UE and gNB/NW (joint operation).

Decision: The document is noted.

R1-2204570 ML terminology, descriptions, and collaboration framework Nokia, Nokia Shanghai Bell

· Proposal 1: RAN1 maintains a list of ML-related terms and definitions. Terminology in Annex A could be used as a starting point.

· Proposal 2: RAN1 agrees that the terms used in this study are valid only for the air interface; at the final stage, some adjustments in terminology may be needed with other 3GPP groups.

· Proposal 3: RAN1 at least to differentiate RL-based algorithms from other types of ML algorithms.

· Proposal 4: RAN1 will support only the collaboration-based solutions if they outperform implementation-based ML solutions and/or non-ML baselines.

· Proposal 5: RAN1 defines and maintains possible collaboration options and uses them to map the collaboration in the use-cases under study.

· Proposal 6: RAN1 to adopt a high level description of the ML-based solutions using a defined set of processing blocks, including at least the description of their input and outputs data, type of algorithm, hyperparameters, and control mechanisms used.

· Proposal 7: The RAN1 complexity comparison is to be performed between the different ML-enabled solutions proposed for the same function (sub-use case).

· Proposal 8: The RAN1 complexity estimation of an ML-enabled function should include the analysis of both training and inference operating modes.

· Proposal 9: RAN1 to consider including at least the following items in the complexity analysis of ML-enabled solutions:

o Training (or initial training/exploration for RL)

§ Number of floating-point operations required for one iteration (forward-backward) of the ML-algorithm

§ Number of required training iterations (steps and epochs) to reach the training performance/accuracy

§ Alternatively to the two items above, the floating-point operations per second needed to run the training

§ Memory footprint of the ML algorithm (Mbit)

§ Memory footprint of the potentially required input and output data storage (Gbit)

§ Number of floating-point operations required to prepare (and format, convert) the input data in case these are not direct measurements or estimates readily available in the radio entity executing the ML-enabled function

§ Estimated number and payload (bytes) of additional signalling messages required to convey the ML-input and ML-output information between the involved radio entities (gNB and UE)

· This might be complemented by the estimated required ML-input and ML-output data rates, i.e., factoring in the acceptable transmission delays

o Inference (or exploration/exploitation for RL)

§ Number of floating-point operations required for one forward pass of the ML-algorithm

§ Alternatively to the item above, the floating-point operations per second needed to run the ML algorithm for (X) seconds

§ Number of floating-point operations required to prepare (and format, convert) the input data in case these are not direct measurements or estimates readily available in the radio entity executing the ML-enabled function

§ Estimated number and payload (bytes) of additional signalling messages required to convey the ML-input and ML-output information between the involved radio entities (gNB, UE)

· This might be complemented by the estimated required ML-input and ML-output data rates, i.e., factoring in the acceptable transmission delays

· Proposal 10: RAN1 to use simulator data for the study; after sufficient progress and convergence on the solutions, evaluation with field data can be discussed.

Decision: The document is noted.
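As an illustration of the complexity items listed in Proposal 9 above, the following minimal sketch shows how the total training cost and the ML-input/ML-output data rate could be derived from the per-iteration and per-message figures. It is not taken from R1-2204570; the names `TrainingCost` and `required_data_rate_bps` and all numeric values are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class TrainingCost:
    """Hypothetical container for two of the training-complexity items."""
    flops_per_iteration: float  # FLOPs for one forward-backward iteration
    iterations: int             # iterations needed to reach the target accuracy

    def total_flops(self) -> float:
        # Total training cost is the per-iteration cost times the iteration count.
        return self.flops_per_iteration * self.iterations


def required_data_rate_bps(payload_bytes: int, messages: int, max_delay_s: float) -> float:
    """Data rate needed to deliver `messages` signalling messages of
    `payload_bytes` each within the acceptable delay `max_delay_s`."""
    if max_delay_s <= 0:
        raise ValueError("max_delay_s must be positive")
    return payload_bytes * 8 * messages / max_delay_s


# Placeholder numbers, chosen only to exercise the functions.
cost = TrainingCost(flops_per_iteration=2.0e9, iterations=50_000)
rate = required_data_rate_bps(payload_bytes=64, messages=10, max_delay_s=0.005)
print(f"total training FLOPs: {cost.total_flops():.3e}")
print(f"required data rate: {rate / 1e6:.3f} Mbit/s")
```

The same two functions apply to the inference items if the per-iteration figure is replaced by the cost of one forward pass.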

R1-2205023 General aspects of AIML framework Qualcomm Incorporated

· Proposal 15: Consider the role of model performance monitoring in relation to RAN4 tests.

Decision: The document is noted.

R1-2204416 General aspects of AI/ML framework Lenovo

· Proposal 1: A general framework for this study on AI/ML for NR air interface enhancement is needed to align the understanding on the relevant functions for future investigation.

· Proposal 2: Define and construct different data sets for different purposes, such as for model training and for model validation.

· Proposal 3: Use Option 1a or 1b, i.e., simulation-based data, to construct the data set at least for model training; the data set construction for other purposes needs further discussion.

· Proposal 4: The acquisition of ground-truth data for supervised learning needs to be workable in practice for any proposed AI/ML approach.

· Proposal 5: Define three categories of gNB-UE collaboration levels as listed in Table 1, according to the interacted AI/ML operation-related information.

· Proposal 6: Adopt the AI Model Characterization Card (MCC) of an AI/ML model in Table 2 as a starting point for further discussion and refinement.

· Proposal 7: Consider the KPIs/Metrics (if applicable) in Table 4 as a starting point for the common aspects of an evaluation methodology of a proposed AI/ML model for any of the agreed use cases.

Decision: The document is noted.

R1-2203067 Discussion on common AI/ML characteristics and operations FUTUREWEI

R1-2203139 Discussion on general aspects of AI/ML framework Huawei, HiSilicon

R1-2203247 Discussion on common AI/ML framework ZTE

R1-2203404 Discussions on AI-ML framework New H3C Technologies Co., Ltd.

R1-2203450 Discussion on AI/ML framework for air interface CATT

R1-2203549 General discussions on AI/ML framework vivo

R1-2203656 Discussion on general aspects of AI/ML for NR air interface China Telecom

R1-2203690 Discussion on general aspects of AI ML framework NEC

R1-2203728 Consideration on common AI/ML framework Sony

R1-2203807 Initial views on the general aspects of AI/ML framework xiaomi

R1-2203896 General aspects of AI ML framework and evaluation methodogy Samsung

R1-2204014 On general aspects of AI/ML framework OPPO

R1-2204062 Evaluating general aspects of AI-ML framework Charter Communications, Inc

R1-2204077 General aspects of AI/ML framework Panasonic

R1-2204120 Considerations on AI/ML framework SHARP Corporation

R1-2204148 General aspects on AI/ML framework LG Electronics

R1-2204179 Views on general aspects on AI-ML framework CAICT

R1-2204237 Discussion on general aspect of AI/ML framework Apple

R1-2204294 Discussion on general aspects of AI/ML framework CMCC

R1-2204374 Discussion on general aspects of AI/ML framework NTT DOCOMO, INC.

R1-2204498 Discussion on general aspects of AIML framework Spreadtrum Communications

R1-2204650 Discussion on AI/ML framework for NR air interface ETRI

R1-2204792 Discussion of AI/ML framework Intel Corporation

R1-2204839 On general aspects of AI and ML framework for NR air interface NVIDIA

R1-2204859 General aspects of AI/ML framework for NR air interface AT&T

R1-2204936 General aspects of AI/ML framework Mavenir

R1-2205065 AI/ML Model Life cycle management Rakuten Mobile

R1-2205075 Discussions on general aspects of AI/ML framework Fujitsu Limited

R1-2205099 Overview to support artificial intelligence over air interface MediaTek Inc.

[109-e-R18-AI/ML-02] Taesang (Qualcomm)

Email discussion on general aspects of AI/ML by May 20

- Check points: May 18

R1-2205285 Summary#1 of [109-e-R18-AI/ML-02] Moderator (Qualcomm)

From May 13th GTW session

Agreement

· Use 3GPP channel models (TR 38.901) as the baseline for evaluations.

· Note: Companies may submit additional results based on datasets other than those generated by 3GPP channel models.

R1-2205401 Summary#2 of [109-e-R18-AI/ML-02] Moderator (Qualcomm)

From May 17th GTW session

Working Assumption

Include the following into a working list of terminologies to be used for RAN1 AI/ML air interface SI discussion.

The description of the terminologies may be further refined as the study progresses.

New terminologies may be added as the study progresses.

It is FFS which subset of terminologies to capture into the TR.

| Terminology | Description |
|---|---|
| Data collection | A process of collecting data by the network nodes, management entity, or UE for the purpose of AI/ML model training, data analytics and inference |
| AI/ML Model | A data driven algorithm that applies AI/ML techniques to generate a set of outputs based on a set of inputs. |
| AI/ML model training | A process to train an AI/ML Model [by learning the input/output relationship] in a data driven manner and obtain the trained AI/ML Model for inference |
| AI/ML model Inference | A process of using a trained AI/ML model to produce a set of outputs based on a set of inputs |
| AI/ML model validation | A subprocess of training, to evaluate the quality of an AI/ML model using a dataset different from one used for model training, that helps selecting model parameters that generalize beyond the dataset used for model training. |
| AI/ML model testing | A subprocess of training, to evaluate the performance of a final AI/ML model using a dataset different from one used for model training and validation. Differently from AI/ML model validation, testing does not assume subsequent tuning of the model. |
| UE-side (AI/ML) model | An AI/ML Model whose inference is performed entirely at the UE |
| Network-side (AI/ML) model | An AI/ML Model whose inference is performed entirely at the network |
| One-sided (AI/ML) model | A UE-side (AI/ML) model or a Network-side (AI/ML) model |
| Two-sided (AI/ML) model | A paired AI/ML Model(s) over which joint inference is performed, where joint inference comprises AI/ML Inference whose inference is performed jointly across the UE and the network, i.e., the first part of inference is firstly performed by UE and then the remaining part is performed by gNB, or vice versa. |
| AI/ML model transfer | Delivery of an AI/ML model over the air interface, either parameters of a model structure known at the receiving end or a new model with parameters. Delivery may contain a full model or a partial model. |
| Model download | Model transfer from the network to UE |
| Model upload | Model transfer from UE to the network |
| Federated learning / federated training | A machine learning technique that trains an AI/ML model across multiple decentralized edge nodes (e.g., UEs, gNBs) each performing local model training using local data samples. The technique requires multiple interactions of the model, but no exchange of local data samples. |
| Offline field data | The data collected from field and used for offline training of the AI/ML model |
| Online field data | The data collected from field and used for online training of the AI/ML model |
| Model monitoring | A procedure that monitors the inference performance of the AI/ML model |
| Supervised learning | A process of training a model from input and its corresponding labels. |
| Unsupervised learning | A process of training a model without labelled data. |
| Semi-supervised learning | A process of training a model with a mix of labelled data and unlabelled data |
| Reinforcement Learning (RL) | A process of training an AI/ML model from input (a.k.a. state) and a feedback signal (a.k.a. reward) resulting from the model's output (a.k.a. action) in an environment the model is interacting with. |
| Model activation | Enable an AI/ML model for a specific function |
| Model deactivation | Disable an AI/ML model for a specific function |
| Model switching | Deactivating a currently active AI/ML model and activating a different AI/ML model for a specific function |
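To make the distinction between the training, validation and testing terms in the working list concrete, the sketch below partitions one dataset into three disjoint subsets. The function name `split_dataset`, the split fractions and the seed are illustrative assumptions and are not part of the working assumption.

```python
import random


def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Split `samples` into disjoint training, validation and test subsets.

    Validation data guides model/hyper-parameter selection during training;
    test data is only used to evaluate the final model (no further tuning).
    """
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty test share")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed for reproducibility
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```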

Conclusion

As indicated in SID, although specific AI/ML algorithms and models may be studied for evaluation purposes, AI/ML algorithms and models are implementation specific and are not expected to be specified.

Observation

Where AI/ML functionality resides depends on specific use cases and sub-use cases.

Conclusion

· RAN1 discussion should focus on network-UE interaction.

o AI/ML functionality mapping within the network (such as gNB, LMF, or OAM) is up to RAN2/3 discussion.

R1-2205474 Summary#3 of [109-e-R18-AI/ML-02] Moderator (Qualcomm)

R1-2205522 Summary#4 of [109-e-R18-AI/ML-02] Moderator (Qualcomm)

From May 20th GTW session

Take the following network-UE collaboration levels as one aspect for defining collaboration levels

1. Level x: No collaboration

2. Level y: Signaling-based collaboration without model transfer

3. Level z: Signaling-based collaboration with model transfer

Note: Other aspect(s) for defining collaboration levels are not precluded and will be discussed in later meetings, e.g., with/without model updating, to support training/inference.

FFS: Clarification is needed for Level x-y boundary
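The three levels above can be sketched as a simple enumeration. The two boolean inputs of `classify` are a simplifying assumption made only for illustration; in particular, the Level x-y boundary is FFS, so the notion of "uses signaling" below is a placeholder rather than an agreed criterion.

```python
from enum import Enum


class CollaborationLevel(Enum):
    X = "No collaboration"
    Y = "Signaling-based collaboration without model transfer"
    Z = "Signaling-based collaboration with model transfer"


def classify(uses_signaling: bool, uses_model_transfer: bool) -> CollaborationLevel:
    """Map two hypothetical flags onto the levels listed above."""
    if uses_model_transfer:
        # Model transfer is delivered over the air interface, so it is
        # treated here as implying signaling-based collaboration.
        return CollaborationLevel.Z
    if uses_signaling:
        return CollaborationLevel.Y
    return CollaborationLevel.X


for flags in [(False, False), (True, False), (True, True)]:
    level = classify(*flags)
    print(flags, "-> Level", level.name.lower(), ":", level.value)
```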

Note: Extended email discussion focusing on evaluation assumptions to take place

· Dates: May 23-24

Including evaluation methodology, KPI, and performance evaluation results.

R1-2203897 Evaluation on AI ML for CSI feedback enhancement Samsung

· Proposal 1-1: For CSI prediction, to model user mobility, consider the link-level channel model with Doppler information in Section 7.5 of TR 38.901.

· Proposal 1-2: For CSI prediction, consider Rel-16 CSI feedback and Rel-17 CSI feedback, as benchmark schemes.

· Proposal 1-3: For CSI predictions, reuse channel models in TR 38.901 to generate datasets for training/testing/validation in this sub-use case.

· Proposal 1-4: For KPIs in CSI prediction, proxy metrics such as NMSE and cosine similarity can be considered as intermediate KPIs and system-level metrics such as UPT can be used for general KPIs.

· Proposal 1-5: For CSI prediction, consider capability-related KPIs such as computational complexity, power consumption, memory storage, and hardware requirements.

· Proposal 2-1: Consider an auto-encoder as a baseline AI/ML model for CSI feedback compression and reconstruction tasks. Further study is needed to select the baseline type of neural network (e.g. CNN, RNN, LSTM).

· Proposal 2-2: For calibration in CSI compression, consider both performance-related KPIs (e.g., reconstruction accuracy) and capability-related KPIs (e.g., computational complexity) for the baseline AI/ML model.

· Proposal 2-3: Only for the model calibration in CSI compression, aligned loss function, hyper-parameter values, and details of the AI model are considered together.

· Proposal 2-4: For CSI compression, consider intermediate performance metrics (e.g., NMSE, CS) and UPT as final metric.

· Proposal 2-5: Consider various aspects of AI/ML models including computational complexity and the model size to study the AI processing burden and requirement at the UE.

· Proposal 2-6: To evaluate the capability of model generalization concerning various channel parameters (e.g., Rician K factor, path loss, angles, delays, powers, etc.), consider datasets from mixed scenarios or different distributions of channel parameters in a single scenario.

· Proposal 3-1: Consider a two-phased approach for evaluation. Phase I to compare various AI/ML models and their gain for representative sub-use case selection and Phase II to evaluate the gain of AI/ML schemes as compared to conventional benchmark schemes in communication systems.

· Proposal 3-2: Strive to reuse the evaluation assumptions of Rel. 16/17 codebook enhancement as much as possible with additional mobility modeling. FFS: mobility modeling, and other additional considerations to model time-correlated CSI.

· Proposal 3-3: Target moderate UE mobility, e.g., up to 30 km/h for joint CSI prediction and compression.

· Proposal 3-4: Consider either Rel-16 or Rel-17 CBs as a benchmark conventional scheme for performance comparison purposes. The selection of a benchmark conventional scheme could be based on whether angle-delay reciprocity is exploited in the channel measurement.

· Proposal 3-5: Consider an autoencoder-based AI/ML solution for joint CSI compression and prediction.

· Proposal 3-6: Consider simpler performance metrics, e.g., NMSE, cosine similarity, for Phase I of evaluation. Traditional performance metrics employed for codebook performance evaluation, such as UPT vs. feedback overhead, can be considered for Phase II.

· Proposal 3-7: Consider UE capability-related KPIs for AI/ML-based CSI compression and prediction, including computational complexity, memory storage, inference latency, model/training data transfer overhead, if applicable.

Decision: The document is noted.

R1-2203550 Evaluation on AI/ML for CSI feedback enhancement vivo

Proposal 1: The dataset for AI-model training, validation and testing can be constructed mainly based on the channel model(s) defined in TR 38.901, namely, UMi, UMa, and Indoor scenarios in system level simulation, and optionally on CDL in link level simulation.

Proposal 2: Consider both cases with same or different input data dimensions for data set construction to verify generalization performance.

Proposal 3: For CSI enhancement, the data set should be constructed in a way that data samples across different UEs, different cells, different drops, different scenarios are all included.

Proposal 4: Both the following two cases should be considered for generalization performance verification

a) Case1: the training data set is constructed by mixing data from different setups

b) Case2: training set and testing data set are from different setups

Proposal 5: For the case that the training data set is constructed by mixing data from different setups, the dataset for generalization can be constructed based on the combination of different scenarios and configurations. Different ratios of data mixture can be evaluated with the same total sample number for each dataset.

Proposal 6: For AI model calibration, the parameters used to construct the dataset need to be aligned.

Proposal 7: Companies are encouraged to share the data set and model files in a publicly accessible way for cross-check purposes. Our initial data set files for CSI compression and CSI prediction are available at the links in [5] and [6].

Proposal 8: Ideal downlink channel estimation is assumed as the starting point for the performance evaluation.

Proposal 9: Use ideal UCI feedback for the performance evaluation.

Proposal 10: The evaluation assumption in Table 2 is used as the SLS assumptions for both non-AI and AI-based performance evaluations.

Proposal 11: Parameter perturbation based on the basic parameter in Table 2 can be conducted to verify generalization performance of each case.

Proposal 12: The evaluation assumption in Table 3 is used as the LLS assumptions for AI-based CSI prediction evaluations.

Proposal 13: Study the performance loss caused by the n-bits quantization of AI model parameters with the float number AI model parameters as baseline.

Proposal 14: Clarify the quantification level of the AI model for evaluation.

Proposal 15: Spectral efficiency [bits/s/Hz] can be used for the final evaluation metric while absolute or square of cosine similarity and NMSE can be used to measure the similarity and difference between input and output as an intermediate metric.

Proposal 16: Generalization performance is also used as one KPI to verify whether AI/ML can work across multiple setups.

Proposal 17: The complexity, parameter sizes, quantization, latencies and power consumption of models need to be considered.

Proposal 18: The impact of the type of historical CSI inputs should be studied for the AI-based CSI prediction.

Proposal 19: The choice of number of historical CSI inputs should be studied for the AI-based CSI prediction.

Proposal 20: The study on the prediction of multiple future CSIs has high priority.

Proposal 21: The generalization performance across frequency domain should be studied.

Proposal 22: The generalization capability with respect to scenarios should be studied.

Proposal 23: Finetuning of AI-based CSI prediction should be studied.

Decision: The document is noted.
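Proposal 5 of R1-2203550 above describes mixing data from different setups at different ratios while keeping the total sample number fixed. A minimal sketch of such a mixture is given below; the helper `mix_datasets`, the setup labels and the 3:1 ratio are hypothetical and serve only as an example.

```python
import random


def mix_datasets(datasets, ratios, total, seed=0):
    """Draw `total` samples from several setups according to `ratios`.

    datasets: dict mapping setup name -> list of samples
    ratios:   dict mapping setup name -> non-negative weight
    Returns a shuffled list of (setup name, sample) pairs.
    """
    if set(datasets) != set(ratios):
        raise ValueError("datasets and ratios must use the same setup names")
    weight_sum = sum(ratios.values())
    if weight_sum <= 0:
        raise ValueError("at least one ratio must be positive")
    names = sorted(datasets)
    counts = {n: int(total * ratios[n] / weight_sum) for n in names}
    # Hand out samples lost to rounding so the total stays exactly `total`.
    leftover = total - sum(counts.values())
    for n in names[:leftover]:
        counts[n] += 1
    rng = random.Random(seed)
    mixed = []
    for n in names:
        if counts[n] > len(datasets[n]):
            raise ValueError(f"not enough samples in setup {n!r}")
        mixed.extend((n, s) for s in rng.sample(datasets[n], counts[n]))
    rng.shuffle(mixed)
    return mixed


setups = {"UMa": list(range(1000)), "UMi": list(range(1000))}
mixed = mix_datasets(setups, ratios={"UMa": 3, "UMi": 1}, total=400)
print(len(mixed), sum(1 for name, _ in mixed if name == "UMa"))
```

Changing only `ratios` while keeping `total` fixed gives the different mixtures that the proposal suggests comparing.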

R1-2203650 Evaluation on AI-based CSI feedback SEU

R1-2204041 Considerations on AI-enabled CSI overhead reduction CENC

R1-2204606 Discussion on the AI/ML methods for CSI feedback enhancements Fraunhofer IIS, Fraunhofer HHI

R1-2203068 Discussion on evaluation of AI/ML for CSI feedback enhancement use case FUTUREWEI

R1-2203140 Evaluation on AI/ML for CSI feedback enhancement Huawei, HiSilicon

R1-2203248 Evaluation assumptions on AI/ML for CSI feedback ZTE

R1-2203281 Evaluations on AI-CSI Ericsson

R1-2203451 Discussion on evaluation on AI/ML for CSI feedback CATT

R1-2203808 Discussion on evaluation on AI/ML for CSI feedback enhancement xiaomi

R1-2204015 Evaluation methodology and preliminary results on AI/ML for CSI feedback enhancement OPPO

R1-2204050 Evaluation on AI/ML for CSI feedback enhancement InterDigital, Inc.

R1-2204055 Evaluation of CSI compression with AI/ML Beijing Jiaotong University

R1-2204063 Performance evaluation of ML techniques for CSI feedback enhancement Charter Communications, Inc

R1-2204149 Evaluation on AI/ML for CSI feedback enhancement LG Electronics

R1-2204180 Some discussions on evaluation on AI-ML for CSI feedback CAICT

R1-2204238 Initial evaluation on AI/ML for CSI feedback Apple

R1-2204295 Discussion on evaluation on AI/ML for CSI feedback enhancement CMCC

R1-2204375 Discussion on evaluation on AI/ML for CSI feedback enhancement NTT DOCOMO, INC.

R1-2204417 Evaluation on AI/ML for CSI feedback Lenovo

R1-2204499 Discussion on evaluation on AI/ML for CSI feedback enhancement Spreadtrum Communications, BUPT

R1-2204571 Evaluation on ML for CSI feedback enhancement Nokia, Nokia Shanghai Bell

R1-2204793 Evaluation for CSI feedback enhancements Intel Corporation

R1-2204840 On evaluation assumptions of AI and ML for CSI feedback enhancement NVIDIA

R1-2204860 Evaluation of AI/ML for CSI feedback enhancements AT&T

R1-2205024 Evaluation on AIML for CSI feedback enhancement Qualcomm Incorporated

R1-2205076 Evaluation on AI/ML for CSI feedback enhancement Fujitsu Limited

R1-2205100 Evaluation on AI/ML for CSI feedback enhancement MediaTek Inc.

[109-e-R18-AI/ML-03] Yuan (Huawei)

Email discussion on evaluation of AI/ML for CSI feedback enhancement by May 20

- Check points: May 18

R1-2205222 Summary#1 of [109-e-R18-AI/ML-03] Moderator (Huawei)

R1-2205223 Summary#2 of [109-e-R18-AI/ML-03] Moderator (Huawei)

From May 13th GTW session

Agreement

For the performance evaluation of the AI/ML based CSI feedback enhancement, system level simulation approach is adopted as baseline

· Link level simulation is optionally adopted

R1-2205224 Summary#3 of [109-e-R18-AI/ML-03] Moderator (Huawei)

Decision: As per email decision posted on May 19th,

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, for the calibration purpose on the dataset and/or AI/ML model over companies, consider aligning the parameters (e.g., for scenarios/channels) for generating the dataset in the simulation as a starting point.

Decision: As per email decision posted on May 20th,

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, for Channel estimation, ideal DL channel estimation is optionally taken into the baseline of EVM for the purpose of calibration and/or comparing intermediate results (e.g., accuracy of AI/ML output CSI, etc.)

· Note: Eventual performance comparison with the benchmark release and drawing SI conclusions should be based on realistic DL channel estimation.

· FFS: the ideal channel estimation is applied for dataset construction, or performance evaluation/inference.

· FFS: How to model the realistic channel estimation

· FFS: Whether ideal channel is used as target CSI for intermediate results calculation with AI/ML output CSI from realistic channel estimation

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, companies can consider performing intermediate evaluation on AI/ML model performance to derive the intermediate KPI(s) (e.g., accuracy of AI/ML output CSI) for the purpose of AI/ML solution comparison.

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, Floating point operations (FLOPs) is adopted as part of the Evaluation Metric, and reported by companies.

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, AI/ML memory storage in terms of AI/ML model size and number of AI/ML parameters is adopted as part of the Evaluation Metric, and reported by companies who may select either or both.

· FFS: the format of the AI/ML parameters
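The FLOPs and memory-storage metrics in the two agreements above can be illustrated for a small fully connected network. This is only a sketch under stated assumptions: one multiply-accumulate is counted as 2 FLOPs (a common but not agreed convention), the layer sizes are arbitrary, and the bits-per-parameter values are placeholders since the format of the AI/ML parameters is FFS.

```python
def dense_layer_stats(n_in: int, n_out: int, bias: bool = True):
    """Return (number of parameters, forward-pass FLOPs) of one dense layer."""
    params = n_in * n_out + (n_out if bias else 0)
    # Assumption: each weight costs one multiply and one add (2 FLOPs),
    # and each bias term costs one extra add.
    flops = 2 * n_in * n_out + (n_out if bias else 0)
    return params, flops


def mlp_stats(layer_sizes):
    """Sum parameters and FLOPs over consecutive dense layers."""
    total_params = 0
    total_flops = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        p, f = dense_layer_stats(n_in, n_out)
        total_params += p
        total_flops += f
    return total_params, total_flops


def model_size_bytes(num_params: int, bits_per_param: int) -> float:
    """Model size for a given (assumed) parameter format."""
    return num_params * bits_per_param / 8


params, flops = mlp_stats([512, 256, 64])  # arbitrary example sizes
print("parameters:", params)
print("FLOPs per inference:", flops)
for bits in (32, 8):
    print(f"size at {bits} bits/param: {model_size_bytes(params, bits):.0f} bytes")
```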

Agreement

For the evaluation of the AI/ML based CSI compression sub use cases, a two-sided model is considered as a starting point, including an AI/ML-based CSI generation part to generate the CSI feedback information and an AI/ML-based CSI reconstruction part which is used to reconstruct the CSI from the received CSI feedback information.

· At least for inference, the CSI generation part is located at the UE side, and the CSI reconstruction part is located at the gNB side.
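The split between a UE-side CSI generation part and a gNB-side CSI reconstruction part can be sketched as two functions that only share the feedback payload. In the sketch below a plain uniform scalar quantizer stands in for both trained parts; this substitution, the function names and the 4-bit setting are assumptions for illustration and do not represent any agreed model.

```python
def csi_generation_part(vector, bits_per_value=4, v_min=-1.0, v_max=1.0):
    """UE side: map real-valued inputs to integer feedback codes.

    Placeholder for a trained encoder: uniform scalar quantization.
    """
    levels = 2 ** bits_per_value
    step = (v_max - v_min) / (levels - 1)
    codes = []
    for v in vector:
        clipped = min(max(v, v_min), v_max)  # keep value inside the quantizer range
        codes.append(round((clipped - v_min) / step))
    return codes


def csi_reconstruction_part(codes, bits_per_value=4, v_min=-1.0, v_max=1.0):
    """gNB side: rebuild an approximation from the received codes.

    Placeholder for a trained decoder: inverse of the quantizer above.
    """
    levels = 2 ** bits_per_value
    step = (v_max - v_min) / (levels - 1)
    return [v_min + c * step for c in codes]


csi_input = [0.3, -0.7, 1.5, 0.0]              # arbitrary example values
feedback = csi_generation_part(csi_input)       # computed at the UE
payload_bits = len(feedback) * 4                # feedback overhead in bits
csi_output = csi_reconstruction_part(feedback)  # computed at the gNB
print(feedback, payload_bits, csi_output)
```

Only `feedback` crosses the air interface in this sketch, which mirrors the inference-time placement stated in the agreement.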

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, if SLS is adopted, the following table is taken as a baseline of EVM

· Note: the following table captures the common parts of the R16 CSI enhancement EVM table and the R17 CSI enhancement EVM table, while the different parts are FFS.

· Note: the baseline EVM is used to compare the performance with the benchmark release, while the AI/ML related parameters (e.g., dataset construction, generalization verification, and AI/ML related metrics) can be of additional/different assumptions.

o The conclusions for the use cases in the SI should be drawn based on generalization verification over potentially multiple scenarios/configurations.

· FFS: modifications on top of the following table for the purpose of AI/ML related evaluations.

| Parameter | Value |
|---|---|
| Duplex, Waveform | FDD (TDD is not precluded), OFDM |
| Multiple access | OFDMA |
| Scenario | Dense Urban (Macro only) is a baseline. Other scenarios (e.g. UMi@4GHz 2GHz, Urban Macro) are not precluded. |
| Frequency Range | FR1 only, FFS 2GHz or 4GHz as a baseline |
| Inter-BS distance | 200m |
| Channel model | According to TR 38.901 |
| Antenna setup and port layouts at gNB | Companies need to report which option(s) are used between: 32 ports: (8,8,2,1,1,2,8), (dH,dV) = (0.5, 0.8)λ; 16 ports: (8,4,2,1,1,2,4), (dH,dV) = (0.5, 0.8)λ. Other configurations are not precluded. |
| Antenna setup and port layouts at UE | 4RX: (1,2,2,1,1,1,2), (dH,dV) = (0.5, 0.5)λ for (rank 1-4); 2RX: (1,1,2,1,1,1,1), (dH,dV) = (0.5, 0.5)λ for (rank 1,2). Other configuration is not precluded. |
| BS Tx power | 41 dBm for 10MHz, 44dBm for 20MHz, 47dBm for 40MHz |
| BS antenna height | 25m |
| UE antenna height & gain | Follow TR36.873 |
| UE receiver noise figure | 9dB |
| Modulation | Up to 256QAM |
| Coding on PDSCH | LDPC; Max code-block size=8448bit |
| Numerology: Slot/non-slot | 14 OFDM symbol slot |
| Numerology: SCS | 15kHz for 2GHz, 30kHz for 4GHz |
| Simulation bandwidth | FFS |
| Frame structure | Slot Format 0 (all downlink) for all slots |
| MIMO scheme | FFS |
| MIMO layers | For all evaluation, companies to provide the assumption on the maximum MU layers (e.g. 8 or 12) |
| CSI feedback | Feedback assumption at least for baseline scheme |
| Overhead | Companies shall provide the downlink overhead assumption (i.e., whether the CSI-RS transmission is UE-specific or not and take that into account for overhead computation) |
| Traffic model | FFS |
| Traffic load (Resource utilization) | FFS |
| UE distribution | 80% indoor (3km/h), 20% outdoor (30km/h). FFS whether/what other indoor/outdoor distribution and/or UE speeds for outdoor UEs needed |
| UE receiver | MMSE-IRC as the baseline receiver |
| Feedback assumption | Realistic |
| Channel estimation | Realistic as a baseline. FFS ideal channel estimation |
| Evaluation Metric | Throughput and CSI feedback overhead as baseline metrics. Additional metrics, e.g., ratio between throughput and CSI feedback overhead, can be used. Maximum overhead (payload size for CSI feedback) for each rank at one feedback instance is the baseline metric for CSI feedback overhead, and companies can provide other metrics. |
| Baseline for performance evaluation | FFS |
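Two rows of the SLS table above lend themselves to a quick numerical check. The sketch below derives the slot duration from the SCS (NR numerology: SCS = 15·2^μ kHz, with a 14-symbol slot lasting 1/2^μ ms) and shows that the listed BS Tx powers correspond to roughly the same power spectral density per MHz. The function names are illustrative only.

```python
import math


def slot_duration_ms(scs_khz: int) -> float:
    """Duration of a 14-symbol slot for SCS = 15 * 2^mu kHz."""
    if scs_khz % 15 != 0:
        raise ValueError("SCS must be a multiple of 15 kHz")
    ratio = scs_khz // 15
    if ratio < 1 or ratio & (ratio - 1):
        raise ValueError("SCS must be 15 kHz times a power of two")
    return 1.0 / ratio


def dbm_to_watt(p_dbm: float) -> float:
    """Convert a power level from dBm to watts."""
    return 10 ** ((p_dbm - 30) / 10)


def psd_dbm_per_mhz(p_dbm: float, bw_mhz: float) -> float:
    """Average power spectral density over the carrier bandwidth."""
    return p_dbm - 10 * math.log10(bw_mhz)


for scs in (15, 30):
    print(f"SCS {scs} kHz -> slot of {slot_duration_ms(scs)} ms")

# BS Tx power values taken from the table above.
for p_dbm, bw in ((41, 10), (44, 20), (47, 40)):
    print(f"{p_dbm} dBm over {bw} MHz: {dbm_to_watt(p_dbm):.1f} W, "
          f"{psd_dbm_per_mhz(p_dbm, bw):.2f} dBm/MHz")
```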

R1-2205491 Summary#4 of [109-e-R18-AI/ML-03] Moderator (Huawei)

Decision: As per email decision posted on May 22nd,

Agreement

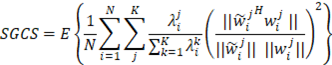

For the evaluation of the AI/ML based CSI feedback enhancement, as a starting point, take the intermediate KPIs of GCS/SGCS and/or NMSE as part of the Evaluation Metric to evaluate the accuracy of the AI/ML output CSI

· For GCS/SGCS,

o FFS: how to calculate GCS/SGCS for rank>1

o FFS: whether GCS or SGCS is adopted

· FFS other metrics, e.g., equivalent MSE, received SNR, or numerical spectral efficiency gap.
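For a single layer (rank 1), the intermediate KPIs named above are commonly computed as follows: GCS is the magnitude of the normalized inner product between the target and output vectors, SGCS is its square, and NMSE is the squared error normalized by the energy of the target. The sketch below uses these common definitions as an assumption; the handling of rank>1, the averaging over samples or subbands, and the choice between GCS and SGCS remain FFS as stated in the agreement.

```python
import math


def _inner(a, b):
    """Inner product a^H b of two equal-length complex vectors."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(sum(abs(x) ** 2 for x in a))


def gcs(v, v_hat):
    """Generalized cosine similarity (rank 1): |v^H v_hat| / (|v| |v_hat|)."""
    return abs(_inner(v, v_hat)) / (_norm(v) * _norm(v_hat))


def sgcs(v, v_hat):
    """Squared generalized cosine similarity (rank 1)."""
    return gcs(v, v_hat) ** 2


def nmse(h, h_hat):
    """Normalized mean squared error: |h - h_hat|^2 / |h|^2."""
    err = sum(abs(x - y) ** 2 for x, y in zip(h, h_hat))
    return err / sum(abs(x) ** 2 for x in h)


target = [1 + 1j, 0.5 - 0.2j, -0.3j]            # arbitrary example vector
rotated = [2j * x for x in target]               # same direction, scaled and phase-rotated
noisy = [x + 0.05 for x in target]               # small perturbation
print("GCS (rotated):", gcs(target, rotated))
print("SGCS (noisy):", sgcs(target, noisy))
print("NMSE (noisy):", nmse(target, noisy))
```

The rotated example shows why cosine-similarity metrics suit eigenvector feedback: they ignore a common scaling and phase, whereas NMSE does not.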

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, if LLS is preferred, the following table is taken as a baseline of EVM

· Note: the baseline EVM is used to compare the performance with the benchmark release, while the AI/ML related parameters (e.g., dataset construction, generalization verification, and AI/ML related metrics) can be of additional/different assumptions.

o The conclusions for the use cases in the SI should be drawn based on generalization verification over potentially multiple scenarios/configurations.

· FFS: modifications on top of the following table for the purpose of AI/ML related evaluations.

· FFS: other parameters and values if needed

| Parameter | Value |
|---|---|
| Duplex, Waveform | FDD (TDD is not precluded), OFDM |
| Carrier frequency | 2GHz as baseline, optional for 4GHz |
| Bandwidth | 10MHz or 20MHz |
| Subcarrier spacing | 15kHz for 2GHz, 30kHz for 4GHz |
| Nt | 32: (8,8,2,1,1,2,8), (dH,dV) = (0.5, 0.8)λ |
| Nr | 4: (1,2,2,1,1,1,2), (dH,dV) = (0.5, 0.5)λ |
| Channel model | CDL-C as baseline, CDL-A as optional |
| UE speed | 3km/h, 10km/h, 20km/h or 30km/h to be reported by companies |
| Delay spread | 30ns or 300ns |
| Channel estimation | Realistic channel estimation algorithms (e.g. LS or MMSE) as a baseline, FFS ideal channel estimation |
| Rank per UE | Rank 1-4. Companies are encouraged to report the Rank number, and whether/how rank adaptation is applied |
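The UE speeds and carrier frequencies in the LLS table above determine how fast the channel varies in time. As a rough guide, the maximum Doppler shift follows the standard relation f_D = v·f_c/c; the sketch below evaluates it for the listed combinations. The function name is illustrative, and the calculation is independent of the CDL model details.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def max_doppler_hz(speed_kmh: float, carrier_hz: float) -> float:
    """Maximum Doppler shift f_D = v * f_c / c for a UE moving at `speed_kmh`."""
    speed_m_s = speed_kmh / 3.6
    return speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


# UE speeds and carrier frequencies taken from the table above.
for carrier_ghz in (2, 4):
    for speed in (3, 10, 20, 30):
        f_d = max_doppler_hz(speed, carrier_ghz * 1e9)
        print(f"{speed:>2} km/h at {carrier_ghz} GHz: f_D = {f_d:.1f} Hz")
```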

Agreement (modified by May 23rd post)

For the evaluation of the AI/ML based CSI feedback enhancement, study the verification of generalization. Companies are encouraged to report how they verify the generalization of the AI/ML model, including:

· The training dataset of configuration(s)/ scenario(s), including potentially the mixed training dataset from multiple configurations/scenarios

· The configuration(s)/ scenario(s) for testing/inference

· The detailed list of configuration(s) and/or scenario(s)

· Other details are not precluded

Note: Above agreement is updated as follows

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, study the verification of generalization. Companies are encouraged to report how they verify the generalization of the AI/ML model, including:

· The configuration(s)/ scenario(s) for training dataset, including potentially the mixed training dataset from multiple configurations/scenarios

· The configuration(s)/ scenario(s) for testing/inference

· Other details are not precluded

Agreement

For the evaluation of the AI/ML based CSI compression sub use cases, companies are encouraged to report the details of their models, including:

· The structure of the AI/ML model, e.g., type (CNN, RNN, Transformer, Inception, ...), the number of layers, branches, real valued or complex valued parameters, etc.

· The input CSI type, e.g., raw channel matrix estimated by UE, eigenvector(s) of the raw channel matrix estimated by UE, etc.

o FFS: the input CSI is obtained from the channel with or without analog BF

· The output CSI type, e.g., channel matrix, eigenvector(s), etc.

· Data pre-processing/post-processing

· Loss function

· Others are not precluded

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, if SLS is adopted, the following parameters are taken into the baseline of EVM

· Note: The 2nd column applies if R16 TypeII codebook is selected as baseline, and the 3rd column applies if R17 TypeII codebook is selected as baseline.

o Additional assumptions from R17 TypeII EVM: the same consideration with respect to utilizing angle-delay reciprocity should be taken for the AI/ML based CSI feedback and the baseline scheme if R17 TypeII codebook is selected as baseline

o FFS baseline for potential sub use cases involving CSI enhancement on time domain

· Note: the baseline EVM is used to compare the performance with the benchmark release, while the AI/ML related parameters (e.g., dataset construction, generalization verification, and AI/ML related metrics) can be of additional/different assumptions.

o The conclusions for the use cases in the SI should be drawn based on generalization verification over potentially multiple scenarios/configurations.

· FFS: modifications on top of the following table for the purpose of AI/ML related evaluations.

| Parameter | Value (if R16 as baseline) | Value (if R17 as baseline) |
|---|---|---|
| Frequency Range | FR1 only, 2GHz as baseline, optional for 4GHz. | FR1 only, 2GHz with duplexing gap of 200MHz between DL and UL, optional for 4GHz |
| Simulation bandwidth | 10 MHz for 15kHz as a baseline, and configurations which emulate larger BW, e.g., same sub-band size as 40/100 MHz with 30kHz, may be optionally considered. Above 15kHz is replaced with 30kHz SCS for 4GHz. | 20 MHz for 15kHz as a baseline (optional for 10 MHz with 15kHz), and configurations which emulate larger BW, e.g., same sub-band size as 40/100 MHz with 30kHz, may be optionally considered. Above 15kHz is replaced with 30kHz SCS for 4GHz |
| MIMO scheme | SU/MU-MIMO with rank adaptation. Companies are encouraged to report the SU/MU-MIMO with RU | SU/MU-MIMO with rank adaptation. Companies are encouraged to report the SU/MU-MIMO with RU |
| Traffic load (Resource utilization) | 20/50/70% Companies are encouraged to report the MU-MIMO utilization. | 20/50/70% Companies are encouraged to report the MU-MIMO utilization. |

Decision: As per email decision posted on May 25th,

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, if SLS is adopted, the Baseline for performance evaluation in the baseline of EVM is captured as follows

| Baseline for performance evaluation | Companies need to report which option is used between - Rel-16 TypeII Codebook as the baseline for performance and overhead evaluation. - Rel-17 TypeII Codebook as the baseline for performance and overhead evaluation. - FFS: Whether Type I Codebook can be optionally considered at least for performance evaluation |

Agreement

For the evaluation of the AI/ML based CSI feedback enhancement, if the GCS/SGCS is adopted as the intermediate KPI as part of the Evaluation Metric for rank>1 cases, companies to report the GCS/SGCS calculation/extension methods, including:

· Method 1: Average over all layers

o

Note: ![]() is the ![]() eigenvector of the target CSI at resource unit i and K is the rank. ![]() is the ![]() output vector of the output CSI of resource unit i. ![]() is the total number of resource units. ![]() denotes the average operation over multiple samples.

· Method 2: Weighted average over all layers

o Note: Companies to report the formula (e.g., whether normalization is applied for eigenvalues)

· Method 3: GCS/SGCS is separately calculated for each layer (e.g., for K layers, K GCS/SGCS values are derived respectively, and comparison is performed per layer)

· Other methods are not precluded

· FFS: Further down-selection among the above options or take one/a subset of the above methods as baseline(s).
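The Method 1 formula itself is not legible in these notes, so the following is only a minimal sketch of how Method 1 and Method 3 could be computed. It assumes the commonly used definition of SGCS as the squared magnitude of the normalized inner product between a target eigenvector and the corresponding output vector. The function names and the nested-list data layout (`target[i][k]` is the vector for resource unit i and layer k) are illustrative assumptions, not part of the agreement. Averaging over multiple samples is left to the caller.

```python
def sgcs(v, v_hat):
    """Squared generalized cosine similarity of two complex vectors (assumed definition)."""
    inner = sum(a.conjugate() * b for a, b in zip(v, v_hat))
    norm_v = sum(abs(a) ** 2 for a in v)
    norm_v_hat = sum(abs(b) ** 2 for b in v_hat)
    return abs(inner) ** 2 / (norm_v * norm_v_hat)


def sgcs_per_layer(target, output):
    """Method 3 style: one SGCS value per layer, averaged over the N resource units."""
    n_units = len(target)
    n_layers = len(target[0])
    return [
        sum(sgcs(target[i][k], output[i][k]) for i in range(n_units)) / n_units
        for k in range(n_layers)
    ]


def sgcs_layer_average(target, output):
    """Method 1 style: unweighted average of the per-layer values over the K layers."""
    per_layer = sgcs_per_layer(target, output)
    return sum(per_layer) / len(per_layer)


# Hypothetical rank-2 example with a single resource unit and 2-element vectors.
target = [[[1 + 0j, 0j], [0j, 1 + 0j]]]
output = [[[1 + 0j, 0j], [1 + 0j, 1 + 0j]]]
per_layer = sgcs_per_layer(target, output)       # layer 0 matches, layer 1 partly
averaged = sgcs_layer_average(target, output)
```

Method 2 would replace the unweighted layer average with weights (e.g., derived from eigenvalues), which companies are to report.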

Final summary in R1-2205492.

Including finalization of representative sub use cases (by RAN1#111) and discussions on potential specification impact.

R1-2203069 Discussion on sub use cases of AI/ML for CSI feedback enhancement use case FUTUREWEI

R1-2203141 Discussion on AI/ML for CSI feedback enhancement Huawei, HiSilicon

R1-2203249 Discussion on potential enhancements for AI/ML based CSI feedback ZTE

R1-2203282 Discussions on AI-CSI Ericsson

R1-2203452 Discussion on other aspects on AI/ML for CSI feedback CATT

R1-2203551 Other aspects on AI/ML for CSI feedback enhancement vivo

R1-2203614 Discussion on AI/ML for CSI feedback enhancement GDCNI (Late submission)

R1-2203729 Considerations on CSI measurement enhancements via AI/ML Sony

R1-2203809 Discussion on AI for CSI feedback enhancement xiaomi

R1-2203898 Representative sub use cases for CSI feedback enhancement Samsung

R1-2203939 Discussion on AI/ML for CSI feedback enhancement NEC

R1-2204016 On sub use cases and other aspects of AI/ML for CSI feedback enhancement OPPO

R1-2204051 Discussion on AI/ML for CSI feedback enhancement InterDigital, Inc.

R1-2204057 CSI compression with AI/ML Beijing Jiaotong University

R1-2204150 Other aspects on AI/ML for CSI feedback enhancement LG Electronics

R1-2204181 Discussions on AI-ML for CSI feedback CAICT

R1-2204239 Discussion on other aspects on AI/ML for CSI feedback Apple

R1-2204296 Discussion on other aspects on AI/ML for CSI feedback enhancement CMCC

R1-2204376 Discussion on other aspects on AI/ML for CSI feedback enhancement NTT DOCOMO, INC.

R1-2204418 Further aspects of AI/ML for CSI feedback Lenovo

R1-2204500 Discussion on other aspects on AI/ML for CSI feedback Spreadtrum Communications

R1-2204568 Discussions on Sub-Use Cases in AI/ML for CSI Feedback Enhancement TCL Communication

R1-2204572 Other aspects on ML for CSI feedback enhancement Nokia, Nokia Shanghai Bell

R1-2204659 Discussion on AI/ML for CSI feedback enhancement Panasonic

R1-2204794 Use-cases and specification for CSI feedback Intel Corporation

R1-2204841 On other aspects of AI and ML for CSI feedback enhancement NVIDIA

R1-2204861 CSI feedback enhancements for AI/ML based MU-MIMO scheduling and parameter configuration AT&T

R1-2204937 AI/ML for CSI feedback enhancement Mavenir

R1-2205025 Other aspects on AIML for CSI feedback enhancement Qualcomm Incorporated

R1-2205077 Views on sub-use case selection and STD impacts on AI/ML for CSI feedback enhancement Fujitsu Limited

R1-2205101 On the challenges of collecting field data for training and testing of AI/ML for CSI feedback enhancement MediaTek Inc.

[109-e-R18-AI/ML-04] Huaning (Apple)

Email discussion on other aspects of AI/ML for CSI feedback enhancement by May 20

- Check points: May 18

R1-2205467 Email discussion on other aspects of AI/ML for CSI enhancement Moderator (Apple)

Decision: As per email decision posted on May 20th,

Agreement

Spatial-frequency domain CSI compression using two-sided AI model is selected as one representative sub use case.

· Note: Study of other sub use cases is not precluded.

· Note: All pre-processing/post-processing, quantization/de-quantization are within the scope of the sub use case.

Conclusion

· Further discuss temporal-spatial-frequency domain CSI compression using two-sided model as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion.

· Further discuss improving the CSI accuracy based on traditional codebook design using one-sided model as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion.

· Further discuss CSI prediction using one-sided model as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion

· Further discuss CSI-RS configuration and overhead reduction as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion

· Further discuss resource allocation and scheduling as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion

· Further discuss joint CSI prediction and compression as a possible sub-use case for CSI feedback enhancement after evaluation methodology discussion.

Final summary in R1-2205556.

Including evaluation methodology, KPI, and performance evaluation results.

R1-2204377 Discussion on evaluation on AI/ML for beam management NTT DOCOMO, INC.

· Proposal 1: Time-domain beam prediction should be studied as a sub use-case of beam management in Rel-18 AI/ML for AI.

· Proposal 2: 3GPP statistical channel models are considered in the evaluation for representative sub use-case selection.

· Proposal 3: Discuss and decide whether and which deterministic channel models should be used to capture the final evaluation results of selected sub use-cases.

· Proposal 4: Spatial-domain beam estimation should be studied as a sub use-case of beam management in Rel-18 AI/ML for AI.

Decision: The document is noted.

R1-2203250 Evaluation assumptions on AI/ML for beam management ZTE

Proposal 1: Due to stronger computing power and comprehensive awareness of the surrounding environment, AI inference is performed on the gNB side to ensure high prediction accuracy and low processing delay.

Proposal 2: Top-K candidate beams with higher predicted RSRP can be filtered out for refined small-range beam sweeping, resulting in a relatively good trade-off between training overhead and performance.

Proposal 3: Deep neural network is exploited for the spatial-domain beam prediction due to its excellent ability on classification tasks and learning complex nonlinear relationships.

Proposal 4: AI/ML based spatial-domain beam prediction can significantly reduce the beam training overhead by avoiding exhaustive beam sweeping.

Proposal 5: Beam prediction accuracy can be used as the performance indicators at the early stage, which may include top-1/top-K beam prediction accuracy, average RSRP difference, and CDFs of RSRP difference between the AI-predicted beam and ideal beam.

Proposal 6: Since the data sets and AI models used by different companies are different, it is necessary to provide common data sets and baseline models for simulation calibration and performance cross-validation.

Proposal 7: AI/ML based solutions are expected to be studied and evaluated to do beam prediction so as to reduce beam tracking latency and RS overhead in high mobility scenarios.

Proposal 8: Consider predictable mobility for beam management as an enhancement aspect for improving UE experience in FR2 high mobility scenario (e.g., high-speed train and high-way).

- Study and evaluate the feasibility and potential system level gain on predictable mobility for beam management based on the identified scenario(s).

Decision: The document is noted.

R1-2203142 Evaluation on AI/ML for beam management Huawei, HiSilicon

R1-2203255 Model and data-driven beam predictions in high-speed railway scenarios PML

R1-2203283 Evaluations on AI-BM Ericsson

R1-2203374 Discussion for evaluation on AI/ML for beam management InterDigital, Inc.

R1-2203453 Discussion on evaluation on AI/ML for beam management CATT

R1-2203552 Evaluation on AI/ML for beam management vivo

R1-2203810 Evaluation on AI/ML for beam management xiaomi

R1-2203899 Evaluation on AI ML for Beam management Samsung

R1-2204017 Evaluation methodology and preliminary results on AI/ML for beam management OPPO

R1-2204059 Evaluation methodology of beam management with AI/ML Beijing Jiaotong University

R1-2204102 Discussion on evaluation of AI/ML for beam management use case FUTUREWEI

R1-2204151 Evaluation on AI/ML for beam management LG Electronics

R1-2204182 Some discussions on evaluation on AI-ML for Beam management CAICT

R1-2204240 Evaluation on AI based Beam Management Apple

R1-2204297 Discussion on evaluation on AI/ML for beam management CMCC

R1-2204419 Evaluation on AI/ML for beam management Lenovo

R1-2204573 Evaluation on ML for beam management Nokia, Nokia Shanghai Bell

R1-2204795 Evaluation for beam management Intel Corporation

R1-2204842 On evaluation assumptions of AI and ML for beam management NVIDIA

R1-2204862 Evaluation methodology aspects on AI/ML for beam management AT&T

R1-2205026 Evaluation on AIML for beam management Qualcomm Incorporated

R1-2205078 Evaluation on AI/ML for beam management Fujitsu Limited

R1-2205102 AI-assisted Target Cell Prediction for Inter-cell Beam Management MediaTek Inc.

[109-e-R18-AI/ML-05] Feifei (Samsung)

Email discussion on evaluation of AI/ML for beam management by May 20

- Check points: May 18

R1-2205269 Feature lead summary #1 evaluation of AI/ML for beam management Moderator (Samsung)

From May 17th GTW session

Agreement

· For dataset construction and performance evaluation (if applicable) for the AI/ML in beam management, system level simulation approach is adopted as baseline

o Link level simulation is optionally adopted

Agreement

· At least for temporal beam prediction, companies report one of the following spatial consistency procedures:

o Procedure A in TR38.901

o Procedure B in TR38.901

Agreement

· At least for temporal beam prediction, Dense Urban (macro-layer only, TR 38.913) is the basic scenario for dataset generation and performance evaluation.

o Other scenarios are not precluded.

· For spatial-domain beam prediction, Dense Urban (macro-layer only, TR 38.913) is the basic scenario for dataset generation and performance evaluation.

o Other scenarios are not precluded.

Agreement

· At least for spatial-domain beam prediction in initial phase of the evaluation, UE trajectory model does not necessarily need to be defined.

Agreement

· At least for temporal beam prediction in initial phase of the evaluation, UE trajectory model is defined. FFS on the details.

R1-2205270 Feature lead summary #2 evaluation of AI/ML for beam management Moderator (Samsung)

R1-2205271 Feature lead summary #3 evaluation of AI/ML for beam management Moderator (Samsung)

Decision: As per email decision posted on May 20th,

Agreement

· UE rotation speed is reported by companies.

o Note: UE rotation speed = 0, i.e., no UE rotation, is not precluded.

Agreement

· For AI/ML in beam management evaluation, RAN1 does not attempt to define any common AI/ML model as a baseline.

Conclusion

Further study AI/ML model generalization in beam management evaluating the inference performance of beam prediction under multiple different scenarios/configurations.

· FFS on different scenarios/configurations

· Companies report the training approach, at least including the dataset assumption for training

Agreement

· For evaluation of AI/ML in BM, the KPI may include the model complexity and computational complexity.

o FFS: the details of model complexity and computational complexity

Agreement

· For spatial-domain beam prediction, further study the following options as baseline performance

o Option 1: Select the best beam within Set A of beams based on the measurement of all RS resources or all possible beams of beam Set A (exhaustive beam sweeping)

§ FFS CSI-RS/SSB as the RS resources

o Option 2: Select the best beam within Set A of beams based on the measurement of RS resources from Set B of beams

§ FFS: Set B is a subset of Set A and/or Set A consists of narrow beams and Set B consists of wide beams

§ FFS: how the conventional scheme obtains performance KPIs

§ FFS: how to determine the subset of RS resources is reported by companies

o Other options are not precluded.

Decision: As per email decision posted on May 22nd,

Agreement

· For dataset generation and performance evaluation for AI/ML in beam management, take the parameters (if applicable) in Table 1.2-1b for Dense Urban scenario for SLS

Table 1.2-1b Assumptions for Dense Urban scenario for AI/ML in beam management

| Parameters | Values |
|---|---|
| Frequency Range | FR2 @ 30 GHz · SCS: 120 kHz |
| Deployment | 200m ISD, · 2-tier model with wrap-around (7 sites, 3 sectors/cells per site) Other deployment assumption is not precluded |
| Channel model | UMa with distance-dependent LoS probability function defined in Table 7.4.2-1 in TR 38.901. |
| System BW | 80MHz |
| UE Speed | · For spatial domain beam prediction, 3km/h · For time domain beam prediction: 30km/h (baseline), 60km/h (optional) · Other values are not precluded |
| UE distribution | · FFS UEs per sector/cell for evaluation. More UEs per sector/cell for data generation is not precluded. · For spatial domain beam prediction: FFS: o Option 1: 80% indoor, 20% outdoor as in TR 38.901 o Option 2: 100% outdoor · For time domain prediction: 100% outdoor |
| Transmission Power | Maximum Power and Maximum EIRP for base station and UE as given by corresponding scenario in 38.802 (Table A.2.1-1 and Table A.2.1-2) |
| BS Antenna Configuration | · [One panel: (M, N, P, Mg, Ng) = (4, 8, 2, 1, 1), (dV, dH) = (0.5, 0.5) λ as baseline] · [Four panels: (M, N, P, Mg, Ng) = (4, 8, 2, 2, 2), (dV, dH) = (0.5, 0.5) λ. (dg,V, dg,H) = (2.0, 4.0) λ as optional] · Other assumptions are not precluded. Companies to explain TXRU weights mapping. Companies to explain beam selection. Companies to explain number of BS beams |
| BS Antenna radiation pattern | TR 38.802 Table A.2.1-6, Table A.2.1-7 |
| UE Antenna Configuration | [Panel structure: (M,N,P) = (1,4,2)] · 2 panels (left, right) with (Mg, Ng) = (1, 2) as baseline · Other assumptions are not precluded. Companies to explain TXRU weights mapping. Companies to explain beam and panel selection. Companies to explain number of UE beams |
| UE Antenna radiation pattern | TR 38.802 Table A.2.1-8, Table A.2.1-10 |
| Beam correspondence | Companies to explain beam correspondence assumptions (in accordance to the two types agreed in RAN4) |
| Link adaptation | Based on CSI-RS |
| Traffic Model | FFS: · Option 1: Full buffer · Option 2: FTP model Other options are not precluded |
| Inter-panel calibration for UE | Ideal, non-ideal following 38.802 (optional). Explain any errors |
| Control and RS overhead | Companies report details of the assumptions |
| Control channel decoding | Ideal or Non-ideal (Companies explain how it is modelled) |
| UE receiver type | MMSE-IRC as the baseline, other advanced receiver is not precluded |
| BF scheme | Companies explain what scheme is used |
| Transmission scheme | Multi-antenna port transmission schemes Note: Companies explain details of the transmission scheme used. |
| Other simulation assumptions | Companies to explain serving TRP selection. Companies to explain scheduling algorithm |
| Other potential impairments | Not modelled (assumed ideal). If impairments are included, companies will report the details of the assumed impairments |
| BS Tx Power | [40 dBm] |
| Maximum UE Tx Power | 23 dBm |
| BS receiver Noise Figure | 7 dB |
| UE receiver Noise Figure | 10 dB |
| Inter site distance | 200m |
| BS Antenna height | 25m |
| UE Antenna height | 1.5 m |
| Car penetration Loss | 38.901, sec 7.4.3.2: μ = 9 dB, σp = 5 dB |

Agreement

· For temporal beam prediction, the following options can be considered as a starting point for UE trajectory model for further study. Companies report further changes or modifications based on the following options for UE trajectory model. Other options are not precluded.

o Option #2: Linear trajectory model with random direction change.

§ UE moving trajectory: UE will move straightly along the selected direction to the end of a time interval, where the length of the time interval is provided by using an exponential distribution with average interval length, e.g., 5s, with granularity of 100 ms.

· UE moving direction change: At the end of the time interval, UE will change the moving direction with the angle difference A_diff from the beginning of the time interval, provided by using a uniform distribution within [-45°, 45°].

· UE moves straightly within the time interval with the fixed speed.

o Option #3: Linear trajectory model with random and smooth direction change.

§ UE moving trajectory: UE will change the moving direction by multiple steps within a time interval, where the length of the time interval is provided by using an exponential distribution with average interval length, e.g., 5s, with granularity of 100 ms.

· UE moving direction change: At the end of the time interval, UE will change the moving direction with the angle difference A_diff from the beginning of the time interval, provided by using a uniform distribution within [-45°, 45°].

· The time interval is further broken into N sub-intervals, e.g. 100ms per sub-interval, and at the end of each sub-interval, UE changes the direction by the angle of A_diff/N.

· UE moves straightly within the time sub-interval with the fixed speed.

o Option #4: Random direction straight-line trajectories.

§ Initial UE location, moving direction and speed: UE is randomly dropped in a cell, and an initial moving direction is randomly selected, with a fixed speed.

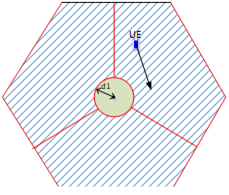

· The initial UE location should be randomly dropped within the following blue area

where d1 is the minimum distance that UE should be away from the BS.

o Each sector is a cell and that the cell association is geometry based.

o During the simulation, inter-cell handover or switching should be disabled.

For training data generation

§ For each UE moving trajectory: the total length of the UE trajectory can be set as T seconds if it is in time, or set as D meters if it is in distance.

· The value of T (or D) can be further discussed

· The trajectory sampling interval granularity depends on UE speed and it can be further discussed.

§ UE can move straightly along the entire trajectory, or

§ UE can move straightly during the time interval, where the time interval is provided by using an exponential distribution with average interval length ![]()

· UE may change the moving direction at the end of the time interval. UE will change the moving direction with the angle difference A_diff from the beginning of the time interval, provided by using a uniform distribution within [-45°, 45°]

§ If the UE trajectory hits the cell boundary (the red line), the trajectory should be terminated.

· If the trajectory length (in time) is less than the length of observation window + prediction window, the trajectory should be discarded.

· At the current stage, the length of observation window + prediction window is not fixed and the companies can report their values.

· Generalization issue is FFS
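As an illustration of Option #2 above, the following is a minimal sketch of a linear trajectory with random direction change. It assumes a flat 2D plane, a sampling step equal to the 100 ms granularity, and the example mean interval of 5 s; the function name, argument names, and the choice to quantize each interval to at least one step are assumptions made here, not agreed details. Cell-boundary termination and the initial drop area from Option #4 are not modelled.

```python
import math
import random


def option2_trajectory(start_xy, heading_deg, speed_mps, duration_s,
                       mean_interval_s=5.0, step_s=0.1, rng=None):
    """Sample UE positions every step_s seconds along straight segments."""
    if rng is None:
        rng = random.Random()
    x, y = start_xy
    points = [(x, y)]
    steps_left = 0
    for step in range(round(duration_s / step_s)):
        if steps_left == 0:
            if step > 0:
                # End of a time interval: turn by A_diff drawn uniformly from [-45, 45] degrees.
                heading_deg += rng.uniform(-45.0, 45.0)
            # Interval length is exponential with the given mean, quantized to the step grid.
            interval_s = rng.expovariate(1.0 / mean_interval_s)
            steps_left = max(1, round(interval_s / step_s))
        # Fixed speed and fixed direction within the interval.
        x += speed_mps * step_s * math.cos(math.radians(heading_deg))
        y += speed_mps * step_s * math.sin(math.radians(heading_deg))
        points.append((x, y))
        steps_left -= 1
    return points


# Hypothetical usage: 30 km/h UE observed for 10 s, seeded for repeatability.
path = option2_trajectory((0.0, 0.0), 0.0, 30 / 3.6, 10.0, rng=random.Random(1))
```

Option #3 could be sketched the same way by spreading each A_diff over N sub-intervals (A_diff/N per sub-interval) instead of applying it in one step.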

Agreement

· For temporal beam prediction, further study the following options as baseline performance

o Option 1a: Select the best beam for T2 within Set A of beams based on the measurements of all the RS resources or all possible beams from Set A of beams at the time instants within T2

o Option 2: Select the best beam for T2 within Set A of beams based on the measurements of all the RS resources from Set B of beams at the time instants within T1

§ Companies explain the detail on how to select the best beam for T2 from Set A based on the measurements in T1

o Where T2 is the time duration for the best beam selection, and T1 is a time duration to obtain the measurements of all the RS resources from Set B of beams.

§ T1 and T2 are aligned with those for AI/ML based methods

o Whether Set A and Set B are the same or different depends on the sub-use case

o Other options are not precluded.

Agreement

· For dataset generation and performance evaluation for AI/ML in beam management, take the following assumption for LLS as optional methodology

| Parameter | Value |
|---|---|
| Frequency | 30GHz. |
| Subcarrier spacing | 120kHz |
| Data allocation | [8 RBs] as baseline, companies can report larger number of RBs. First 2 OFDM symbols for PDCCH, and following 12 OFDM symbols for data channel |
| PDCCH decoding | Ideal or Non-ideal (Companies explain how it is modelled) |
| Channel model | FFS: LOS channel: CDL-D extension, DS = 100ns. NLOS channel: CDL-A/B/C extension, DS = 100ns. Companies explain details of extension methodology considering spatial consistency. Other channel models are not precluded. |
| BS antenna configurations | · One panel: (M, N, P, Mg, Ng) = (4, 8, 2, 1, 1), (dV, dH) = (0.5, 0.5) λ as baseline · Other assumptions are not precluded. Companies to explain TXRU weights mapping. Companies to explain beam selection. Companies to explain number of BS beams |
| BS antenna element radiation pattern | Same as SLS |
| BS antenna height and antenna array downtilt angle | 25m, 110° |
| UE antenna configurations | Panel structure: (M, N, P) = (1, 4, 2), · 2 panels (left, right) with (Mg, Ng) = (1, 2) as baseline · 1 panel as optional · Other assumptions are not precluded. Companies to explain TXRU weights mapping. Companies to explain beam and panel selection. Companies to explain number of UE beams |
| UE antenna element radiation pattern | Same as SLS |
| UE moving speed | Same as SLS |
| Raw data collection format | Depends on sub-use case and companies' choice. |

Decision: As per email decision posted on May 25th,

Agreement

· For UE trajectory model, UE orientation can be independent from UE moving trajectory model. FFS on the details.

o Other UE orientation model is not precluded.

Agreement

· Companies are encouraged to report the following aspects of AI/ML model in RAN 1 #110. FFS on whether some of aspects need be defined or reported.

o Description of AI/ML model, e.g., NN architecture type

o Model inputs/outputs (per sub-use case)

o Training methodology, e.g.

§ Loss function/optimization function

§ Training/ validity /testing dataset:

· Dataset size, number of training/ validity /test samples

· Model validity area: e.g., whether model is trained for single sector or multiple sectors

· Details on Model monitoring and model update, if applicable

o Other related aspects are not precluded

Agreement

· To evaluate the performance of AI/ML in beam management, further study the following KPI options:

o Beam prediction accuracy related KPIs, may include the following options:

§ Average L1-RSRP difference of Top-1 predicted beam

§ Beam prediction accuracy (%) for Top-1 and/or Top-K beams, FFS the definition:

· Option 1: The beam prediction accuracy (%) is the percentage of cases in which the Top-1 predicted beam is one of the Top-K genie-aided beams

· Option 2: The beam prediction accuracy (%) is the percentage of cases in which the Top-1 genie-aided beam is one of the Top-K predicted beams

§ CDF of L1-RSRP difference for Top-1 predicted beam

§ Beam prediction accuracy (%) with 1dB margin for Top-1 beam

· The beam prediction accuracy (%) with 1dB margin is the percentage of the Top-1 predicted beam whose ideal L1-RSRP is within 1dB of the ideal L1-RSRP of the Top-1 genie-aided beam

§ the definition of L1-RSRP difference of Top-1 predicted beam:

· the difference between the ideal L1-RSRP of Top-1 predicted beam and the ideal L1-RSRP of the Top-1 genie-aided beam

§ Other beam prediction accuracy related KPIs are not precluded and can be reported by companies.

o System performance related KPIs, may include the following options:

§ UE throughput: CDF of UE throughput, avg. and 5%ile UE throughput

§ RS overhead reduction at least for spatial-domain beam prediction at least for top-1 beam:

· 1-N/M,

o where N is the number of beams (with reference signal (SSB and/or CSI-RS)) required for measurement

o where (FFS) M is the total number of beams

o Note: Non-AI/ML approach based on the measurement of these M beams may be used as a baseline

· FFS on whether to define a proper value for M for evaluation.

§ Other System performance related KPIs are not precluded and can be reported by companies.

o Other KPIs are not precluded and can be reported by companies, for example:

§ Reporting overhead reduction: (FFS) The number of UCI report and UCI payload size, for temporal /spatial prediction

§ Latency reduction:

· (FFS) (1 - [Total transmission time of N beams] / [Total transmission time of M beams])

o where N is the number of beams (with reference signal (SSB and/or CSI-RS)) in the input beam set required for measurement

o where M is the total number of beams

§ Power consumption reduction: FFS on details
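The beam prediction accuracy KPIs and the RS overhead reduction listed above can be illustrated with a short sketch. It assumes each sample is a list of per-beam L1-RSRP values in dBm, one list of predicted values and one list of ideal (genie-aided) values; the function names and example numbers are hypothetical. The sign convention for the L1-RSRP difference (predicted beam minus genie-aided beam, hence at most 0 dB) is also an assumption, since the agreement does not fix it.

```python
def top_k(rsrp, k):
    """Indices of the k strongest beams, strongest first."""
    return sorted(range(len(rsrp)), key=lambda b: rsrp[b], reverse=True)[:k]


def accuracy_option1(predicted, ideal, k):
    """Option 1: % of samples whose Top-1 predicted beam is among the Top-K genie-aided beams."""
    hits = sum(top_k(p, 1)[0] in top_k(g, k) for p, g in zip(predicted, ideal))
    return 100.0 * hits / len(ideal)


def accuracy_option2(predicted, ideal, k):
    """Option 2: % of samples whose Top-1 genie-aided beam is among the Top-K predicted beams."""
    hits = sum(top_k(g, 1)[0] in top_k(p, k) for p, g in zip(predicted, ideal))
    return 100.0 * hits / len(ideal)


def avg_l1_rsrp_difference(predicted, ideal):
    """Average ideal L1-RSRP of the Top-1 predicted beam minus that of the genie-aided best beam."""
    diffs = [g[top_k(p, 1)[0]] - max(g) for p, g in zip(predicted, ideal)]
    return sum(diffs) / len(diffs)


def accuracy_with_margin(predicted, ideal, margin_db=1.0):
    """% of samples whose Top-1 predicted beam is within margin_db of the genie-aided best beam."""
    hits = sum(max(g) - g[top_k(p, 1)[0]] <= margin_db for p, g in zip(predicted, ideal))
    return 100.0 * hits / len(ideal)


def rs_overhead_reduction(n_measured, m_total):
    """1 - N/M, with N measured beams out of M beams in total."""
    return 1.0 - n_measured / m_total


# Hypothetical data: 2 samples, 4 beams each (dBm).
ideal = [[-80, -85, -90, -95], [-100, -82, -81.5, -90]]
predicted = [[-81, -84, -92, -96], [-99, -80, -83, -91]]
```

With this data the second sample picks a beam that is 0.5 dB below the genie-aided best beam, so it counts as a miss for Top-1 accuracy but as a hit under the 1 dB margin.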

Final summary in R1-2205641.

Including finalization of representative sub use cases (by RAN1#111) and discussions on potential specification impact.

R1-2203143 Discussion on AI/ML for beam management Huawei, HiSilicon

R1-2203251 Discussion on potential enhancements for AI/ML based beam management ZTE

R1-2203284 Discussions on AI-BM Ericsson

R1-2203375 Discussion for other aspects on AI/ML for beam management InterDigital, Inc.

R1-2203454 Discussion on other aspects on AI/ML for beam management CATT

R1-2203553 Other aspects on AI/ML for beam management vivo

R1-2203691 Discussion on other aspects on AI/ML for beam management NEC

R1-2203730 Consideration on AI/ML for beam management Sony

R1-2203811 Other aspects on AI/ML for beam management xiaomi

R1-2203900 Representative sub use cases for beam management Samsung

R1-2204018 Other aspects of AI/ML for beam management OPPO

R1-2204060 Beam management with AI/ML Beijing Jiaotong University

R1-2204078 Discussion on sub use cases of beam management Panasonic

R1-2204103 Discussion on sub use cases of AI/ML for beam management use case FUTUREWEI

R1-2204152 Other aspects on AI/ML for beam management LG Electronics

R1-2204183 Discussions on AI-ML for Beam management CAICT

R1-2204241 Enhancement on AI based Beam Management Apple

R1-2204298 Discussion on other aspects on AI/ML for beam management CMCC

R1-2204378 Discussion on other aspects on AI/ML for beam management NTT DOCOMO, INC.

R1-2204420 Further aspects of AI/ML for beam management Lenovo

R1-2204501 Discussion on other aspects on AI/ML for beam management Spreadtrum Communications

R1-2204569 Discussions on Sub-Use Cases in AI/ML for Beam Management TCL Communication

R1-2204574 Other aspects on ML for beam management Nokia, Nokia Shanghai Bell

R1-2204796 Use-cases and specification for beam management Intel Corporation

R1-2204843 On other aspects of AI and ML for beam management NVIDIA

R1-2204863 System performance aspects on AI/ML for beam management AT&T

R1-2204938 AI/ML for beam management Mavenir

R1-2205027 Other aspects on AIML for beam management Qualcomm Incorporated

R1-2205079 Sub-use cases and spec impact on AI/ML for beam management Fujitsu Limited

R1-2205094 Discussion on Codebook Enhancement with AI/ML Charter Communications, Inc

[109-e-R18-AI/ML-06] Zhihua (OPPO)

Email discussion on other aspects of AI/ML for beam management by May 20

- Check points: May 18

R1-2205252 Summary#1 for other aspects on AI/ML for beam management Moderator (OPPO)

R1-2205253 Summary#2 for other aspects on AI/ML for beam management Moderator (OPPO)

From May 17th GTW session

Agreement

For AI/ML-based beam management, support BM-Case1 and BM-Case2 for characterization and baseline performance evaluations

· BM-Case1: Spatial-domain DL beam prediction for Set A of beams based on measurement results of Set B of beams

· BM-Case2: Temporal DL beam prediction for Set A of beams based on the historic measurement results of Set B of beams

· FFS: details of BM-Case1 and BM-Case2

· FFS: other sub use cases

Note: For BM-Case1 and BM-Case2, Beams in Set A and Set B can be in the same Frequency Range

Agreement

Regarding the sub use case BM-Case2, the measurement results of K (K>=1) latest measurement instances are used for AI/ML model input:

· The value of K is up to companies

Agreement

Regarding the sub use case BM-Case2, AI/ML model output should be F predictions for F future time instances, where each prediction is for each time instance.

· At least F = 1

· The other value(s) of F is up to companies
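
The input/output shaping agreed above for BM-Case2 (K latest measurement instances in, F predictions out) can be illustrated with a minimal Python sketch. It assumes the measurement history is a list of per-instance L1-RSRP vectors over Set B, and that the prediction target at each instance is the index of the best beam in Set A. The function name make_windows and both of these representations are hypothetical and are not part of the agreement.

```python
from typing import List, Sequence, Tuple


def make_windows(
    rsrp_set_b: Sequence[Sequence[float]],
    best_beam_set_a: Sequence[int],
    K: int,
    F: int = 1,
) -> List[Tuple[List[List[float]], List[int]]]:
    """Build (input, target) pairs for temporal beam prediction.

    rsrp_set_b[t]      : L1-RSRP values of the Set B beams at instance t (assumed input)
    best_beam_set_a[t] : index of the best Set A beam at instance t (assumed label)
    K                  : number of latest measurement instances used as input (K >= 1)
    F                  : number of future time instances to predict (F >= 1)
    """
    if K < 1 or F < 1:
        raise ValueError("K and F must both be >= 1")
    if len(rsrp_set_b) != len(best_beam_set_a):
        raise ValueError("measurements and labels must have the same length")

    samples = []
    total = len(rsrp_set_b)
    # t is the first future instance; the K instances before it form the input.
    for t in range(K, total - F + 1):
        history = [list(m) for m in rsrp_set_b[t - K:t]]
        future = list(best_beam_set_a[t:t + F])
        samples.append((history, future))
    return samples
```

With five measurement instances, K = 2 and F = 1, this yields three samples, each pairing two past Set B measurements with one future Set A label.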

Agreement

For the sub use case BM-Case1, consider both Alt.1 and Alt.2 for further study:

· Alt.1: AI/ML inference at NW side

· Alt.2: AI/ML inference at UE side

Agreement

For the sub use case BM-Case2, consider both Alt.1 and Alt.2 for further study:

· Alt.1: AI/ML inference at NW side

· Alt.2: AI/ML inference at UE side

R1-2205453 Summary#3 for other aspects on AI/ML for beam management Moderator (OPPO)

Decision: As per email decision posted on May 20th,

Conclusion

For the sub use case BM-Case1, consider the following alternatives for further study:

· Alt.1: Set B is a subset of Set A

o FFS: the number of beams in Set A and B

o FFS: how to determine Set B out of the beams in Set A (e.g., fixed pattern, random pattern)

· Alt.2: Set A and Set B are different (e.g. Set A consists of narrow beams and Set B consists of wide beams)

o FFS: the number of beams in Set A and B

o FFS: QCL relation between beams in Set A and beams in Set B

o FFS: construction of Set B (e.g., regular pre-defined codebook, codebook other than regular pre-defined one)

· Note1: Set A is for DL beam prediction and Set B is for DL beam measurement.

· Note2: The narrow and wide beam terminology is for SI discussion only and has no specification impact

· Note3: The codebook constructions of Set A and Set B can be clarified by the companies.
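
For Alt.1 above (Set B is a subset of Set A), the two example ways of determining Set B can be sketched as follows. This is only an illustration under stated assumptions: beams in Set A are identified by indices 0..N-1, the "fixed pattern" is taken to be a uniform stride, and the "random pattern" is a uniform draw without replacement. The function names and the stride, offset and seed parameters are hypothetical; how Set B is actually determined remains FFS.

```python
import random
from typing import List, Optional


def set_b_fixed_pattern(num_beams_a: int, stride: int, offset: int = 0) -> List[int]:
    """Pick every `stride`-th beam index of Set A (assumed fixed pattern)."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return list(range(offset, num_beams_a, stride))


def set_b_random_pattern(
    num_beams_a: int, num_beams_b: int, seed: Optional[int] = None
) -> List[int]:
    """Pick `num_beams_b` distinct beam indices of Set A uniformly at random."""
    if not 0 < num_beams_b <= num_beams_a:
        raise ValueError("Set B must be a non-empty subset of Set A")
    rng = random.Random(seed)  # local generator, so results are reproducible per seed
    return sorted(rng.sample(range(num_beams_a), num_beams_b))
```

For example, set_b_fixed_pattern(32, 4) selects 8 of 32 beams at indices 0, 4, ..., 28, while set_b_random_pattern(32, 8, seed=1) selects 8 distinct indices that stay the same across runs with that seed.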

Conclusion

Regarding the sub use case BM-Case1, further study the following alternatives for AI/ML input:

· Alt.1: Only L1-RSRP measurement based on Set B

· Alt.2: L1-RSRP measurement based on Set B and assistance information

o FFS: Assistance information. The following were mentioned by companies in the discussion: Tx and/or Rx beam shape information (e.g., Tx and/or Rx beam pattern, Tx and/or Rx beam boresight direction (azimuth and elevation), 3dB beamwidth, etc.), expected Tx and/or Rx beam for the prediction (e.g., expected Tx and/or Rx angle, Tx and/or Rx beam ID for the prediction), UE position information, UE direction information, Tx beam usage information, UE orientation information, etc.

§ Note: The provision of assistance information may be infeasible due to the concern of disclosing proprietary information to the other side.

· Alt.3: CIR based on Set B

· Alt.4: L1-RSRP measurement based on Set B and the corresponding DL Tx and/or Rx beam ID

· Note1: It is up to companies to provide other alternative(s) including the combination of some alternatives

· Note2: All the inputs are nominal and only for discussion purpose.

Conclusion

For the sub use case BM-Case2, further study the following alternatives with potential down-selection:

· Alt.1: Set A and Set B are different (e.g. Set A consists of narrow beams and Set B consists of wide beams)

o FFS: QCL relation between beams in Set A and beams in Set B

· Alt.2: Set B is a subset of Set A (Set A and Set B are not the same)

o FFS: how to determine Set B out of the beams in Set A (e.g., fixed pattern, random pattern)

· Alt.3: Set A and Set B are the same

· Note1: Predicted beam(s) are selected from Set A and measured beams used as input are selected from Set B.

· Note2: It is up to companies to provide other alternative(s)

· Note3: The narrow and wide beam terminology is for SI discussion only and has no specification impact

Conclusion

Regarding the sub use case BM-Case2, further study the following alternatives of measurement results for AI/ML input (for each past measurement instance):

· Alt.1: Only L1-RSRP measurement based on Set B

· Alt.2: L1-RSRP measurement based on Set B and assistance information

o FFS: Assistance information. The following were mentioned by companies in the discussion: Tx and/or Rx beam angle, position information, UE direction information, positioning-related measurement (such as Multi-RTT), expected Tx and/or Rx beam/occasion for the prediction (e.g., expected Tx and/or Rx beam angle for the prediction, expected occasions of the prediction), Tx and/or Rx beam shape information (e.g., Tx and/or Rx beam pattern, Tx and/or Rx beam boresight directions (azimuth and elevation), 3dB beamwidth, etc.), increase ratio of L1-RSRP for best N beams, UE orientation information

§ Note: The provision of assistance information may be infeasible due to the concern of disclosing proprietary information to the other side.

· Alt.3: L1-RSRP measurement based on Set B and the corresponding DL Tx and/or Rx beam ID

· Note1: It is up to companies to provide other alternative(s) including the combination of some alternatives

· Note2: All the inputs are nominal and only for discussion purpose.

Final summary in R1-2205454.

Including evaluation methodology, KPI, and performance evaluation results.

R1-2203554 Evaluation on AI/ML for positioning accuracy enhancement vivo

· Select the InF-DH scenario with clutter parameter {density 60%, height 6m, size 2m} as a typical scenario for positioning accuracy enhancement evaluation.

· Dataset and AI model sharing among different companies should be encouraged.

· For the purpose of link level and system level evaluation, statistical models (from TR 38.901 and TR 38.857) are utilized to generate dataset for AI/ML based positioning for model training/validation and testing.

o Field data measured in actual deployment for AI/ML model performance testing should be allowed and encouraged

· The positioning accuracy performance of AI/ML based positioning should be evaluated under all scenarios.

· Spatial consistency assumption should be adopted for performance evaluation.

· Performance related KPIs, such as @50%, @90% positioning accuracy defined in TR 38.857, can be used directly to evaluate the performance gain of AI/ML based positioning.

· Consider the following different levels of generalization performance for performance evaluation.

o Generalization performance from one cell to another

o Generalization performance from one drop to another

o Generalization performance from one scenario to another

· Computational complexity, parameter quantity and training data requirement are three crucial cost-related KPIs for AI/ML based positioning, and should be considered with high priority at the beginning of this study.

· Support time domain CIR as the model input for AI/ML based positioning.

· Study further on the benefits of two-step positioning for AI/ML based positioning in terms of positioning accuracy and AI model generalization.

· Study further on the benefits of fine-tuning for AI/ML based positioning in terms of positioning accuracy and AI model generalization.

Decision: The document is noted.
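
The @50% and @90% positioning accuracy KPIs referred to in R1-2203554 can be read as points on the CDF of the positioning error. The sketch below is a minimal illustration, assuming horizontal (2D) error and a nearest-rank percentile, i.e. the smallest observed error such that at least the given percentage of UEs have an error no larger than it. The function names and the nearest-rank choice are assumptions of this sketch; the exact KPI definition is the one in TR 38.857.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def horizontal_errors(estimates: Sequence[Point], truths: Sequence[Point]) -> List[float]:
    """Per-UE horizontal distance between estimated and true positions (metres)."""
    if len(estimates) != len(truths):
        raise ValueError("estimates and truths must have the same length")
    return [math.hypot(ex - tx, ey - ty) for (ex, ey), (tx, ty) in zip(estimates, truths)]


def error_at_percentile(errors: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of the error CDF (assumed definition)."""
    if not errors:
        raise ValueError("errors must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(errors)
    # Smallest rank whose share of samples reaches pct percent.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]
```

Calling error_at_percentile(errors, 50) and error_at_percentile(errors, 90) on the same error list gives the two values that would be compared between an AI/ML based method and a baseline.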

R1-2203144 Evaluation on AI/ML for positioning accuracy enhancement Huawei, HiSilicon

Proposal 1: For AI/ML-based LOS/NLOS identification evaluation, adopt the normalized Power Delay Profile as the training inputs.

Proposal 2: For AI/ML-based fingerprint positioning evaluation, adopt the Channel Impulse Response as the training inputs.

Proposal 3: For AI/ML-based positioning evaluation, adopt the positioning accuracy and model complexity as the KPIs.

Proposal 4: For heavy NLOS scenarios, spatially consistent channel modeling shall be employed for the evaluation of AI/ML-based fingerprint positioning. Adopt one or both of the following concepts:

· 2D-Filtering method.

· Interpolation method.

Proposal 5: For AI/ML-based positioning evaluation, adopt IIoT scenario as baseline.

· A small number of gNB antennas should be evaluated.

Proposal 6: For AI/ML-based LOS/NLOS Identification evaluation, the baseline solution should be aligned with an existing traditional algorithm.

Proposal 7: For AI/ML-based positioning evaluation, training inputs generated from simulation platform should be a baseline.

Proposal 8: AI/ML-based fingerprint positioning should be studied for positioning accuracy enhancements under heavy NLOS conditions in Rel-18.

Proposal 9: For the evaluation of AI/ML-based fingerprint positioning, study the generalization of the AI/ML model for varying environments.

Decision: The document is noted.
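
As an illustration of the normalized Power Delay Profile input named in Proposal 1 of R1-2203144, the sketch below derives a PDP from a time-domain Channel Impulse Response. It assumes a single-link CIR given as a list of complex taps and normalizes by the strongest tap. The contribution summary above does not state which normalization is used, so the peak normalization and the function name normalized_pdp are assumptions of this sketch.

```python
from typing import List, Sequence


def normalized_pdp(cir: Sequence[complex]) -> List[float]:
    """Per-tap power of a CIR, scaled so the strongest tap equals 1.0.

    cir : complex channel taps ordered by delay (assumed single Tx-Rx link)
    """
    power = [abs(h) ** 2 for h in cir]  # |h|^2 per delay tap
    peak = max(power, default=0.0)
    if peak == 0.0:
        raise ValueError("CIR has no energy; cannot normalize")
    return [p / peak for p in power]
```

For the taps [1+0j, 3+4j, 0j], the tap powers are 1, 25 and 0, so the normalized profile is approximately [0.04, 1.0, 0.0].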

R1-2203252 Evaluation assumptions on AI/ML for positioning ZTE

R1-2203285 Evaluations on AI-Pos Ericsson

R1-2203455 Discussion on evaluation on AI/ML for positioning CATT

R1-2203812 Initial views on the evaluation on AI/ML for positioning accuracy enhancement xiaomi

R1-2203901 Evaluation on AI ML for Positioning Samsung

R1-2204019 Evaluation methodology and preliminary results on AI/ML for positioning accuracy enhancement OPPO

R1-2204104 Discussion on evaluation of AI/ML for positioning accuracy enhancements use case FUTUREWEI

R1-2204153 Evaluation on AI/ML for positioning accuracy enhancement LG Electronics

R1-2204159 Evaluation assumptions and results for AI/ML based positioning InterDigital, Inc.

R1-2204184 Some discussions on evaluation on AI-ML for positioning accuracy enhancement CAICT

R1-2204242 Evaluation on AI/ML for positioning accuracy enhancement Apple

R1-2204299 Discussion on evaluation on AI/ML for positioning accuracy enhancement CMCC